Rigs are software

There are a bunch of new ephemeral rig tests at the bottom of this post. If you’re here to see cool rigging and don’t want to read a fairly long rant, scroll down there. Then scroll back up because you should read my rant anyway!

Years ago--I guess it would have been some time in 2007--Anzovin Studio was working with a company called Digital Fish on rigging tools for their animation package Reflex. I’ve probably mentioned Reflex here before--the fact that it was never released publicly is pretty good evidence that God is dead. You might have heard of Digital Fish, since one of the things they do these days is maintain OpenSubdiv.

Reflex did not require you to rig with its node graph, and indeed did not (at that time) provide any GUI tools for rigging at all. Instead you’d code rigs in a domain-specific language they’d developed for that purpose. What you could do with that language was extremely open, including defining your own deformers as needed. Coming from a Maya-by-way-of-Lightwave-and-Animation:Master TD background, not a programming background, this sounded insane to me. And indeed, it probably contributed to Reflex’s slow acceptance and eventual dormancy--but not because it was a bad idea. I’ve come to believe that Reflex’s rigging-as-programming approach was in fact precisely the right idea. It was just ahead of its time, and most TDs, including me, weren’t ready to hear it.

Well get ready, because much like the Master of Magnetism was eventually acknowledged to have Made Some Valid Points, we’re all going to have to admit that Reflex Was Right.

The fact is that rigging is programming. It wasn’t necessarily meant to be. I recently encountered someone I hadn’t spoken to for years. He hadn’t had any real contact with the industry since the late 90s, and he asked me if I still did “boning.” (No, seriously, this was an actual term that people used, I’m not making that up.) Go back far enough in time, and rigging really is basically about placing bones and defining deformation and not much else.

But obviously rigging today is nothing like that. Even the simplest modern rig contains a great deal of internal logic about what drives what, by what method, under what conditions. Whether or not you are actually writing any code, that is programming. To be clear, I’m not referring here to scripted auto-rigging. I mean the rig itself is a program. A great deal of the problem with scripted auto-rigging tools--and despite being the designer of a fairly popular Maya autorig tool, I have begun to regard the whole “auto-rigging” concept with suspicion--is that it’s a program that exists only to generate another program. You might even say that an auto-rig tool compiles to a rig, which would be fine except that the auto-rig program is frequently more complex than the rig it’s supposed to generate would be if expressed in code, and that’s not the direction that’s supposed to go.

This idea that rigs are software isn’t new, or something I made up--it’s becoming an increasingly common view. Raffaele Fragapane’s Cult of Rig takes that view--part of what makes his approach so interesting is that he’s applying programming concepts like encapsulation to the Maya node graph. Cesar Saez has a great article on how the TD world is bifurcating into people who are fundamentally artists and people who are really software engineers.

To some of the people who read this blog, the idea of just coding a rig from scratch probably sounds terrifying. The good news is that it actually isn’t! What surprised me about this project is that it was much easier than I’d thought--considerably easier than my earlier, hacky implementation of ephemeral rigging that attempted to get Maya to do a bunch of the work for me. Once again, we see that the supposedly more intuitive, “user-friendly” approach turns out to be much more work than just buckling down and doing things the “hard” way.

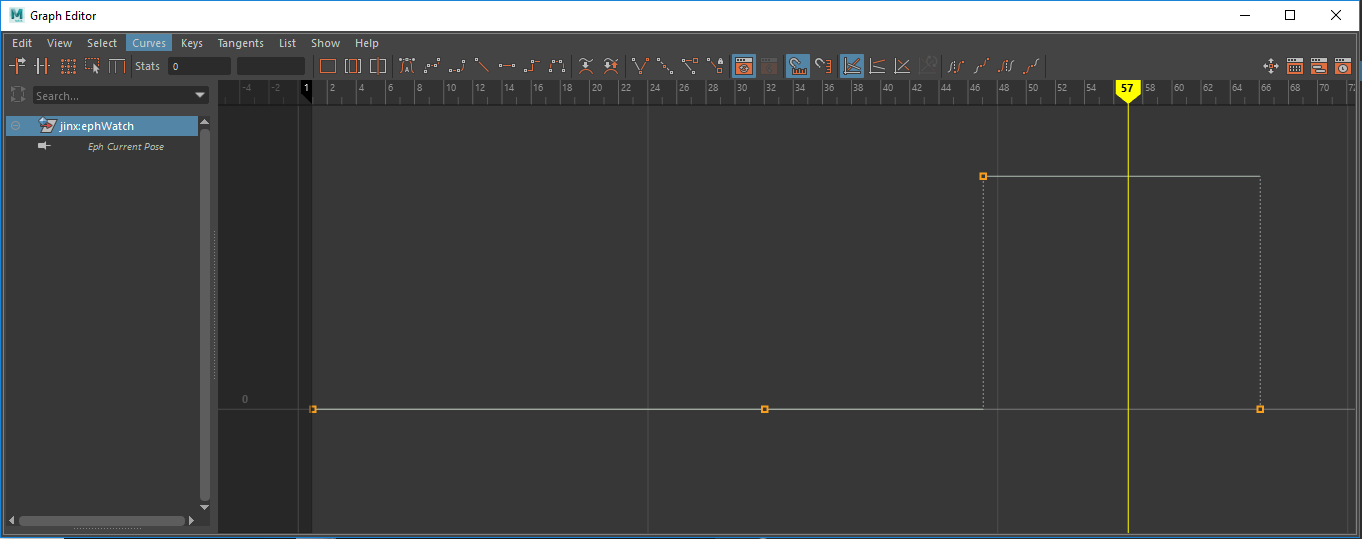

That said, thinking of rigging as programming is more a point of view than a specific practice--it doesn’t necessarily imply that you have to write your rig in Python the way I’m doing now. However, creating your “rig program” purely through the Maya node graph does lock you in to a very specific idea of how the rig can evaluate. I’m not arguing that node graphs are inherently bad--in fact I chose to write my new ephemeral rig system as a graph with nodes, specifically because it’s an easy way to figure out the correct order to evaluate things in. But Maya generally expects everything to evaluate through its graph, a set of rules Maya itself doesn’t always follow.
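To make the "correct order" point concrete, here is a minimal sketch of how a dependency graph yields an evaluation order. It is not the ephemeral rig code: the control names and the `evaluation_order` helper are made up for illustration, and it leans on the standard library's `graphlib` (Python 3.9+) rather than anything Maya provides.

```python
from graphlib import TopologicalSorter

# Hypothetical rig: each key is a control, each value lists the controls
# it depends on (its inputs). These names are illustrative only.
dependencies = {
    "pelvis": (),
    "spine": ("pelvis",),
    "hip": ("pelvis",),
    "knee": ("hip",),
    "ankle": ("knee",),
}

def evaluation_order(deps):
    """Return control names ordered so each one comes after its inputs."""
    return list(TopologicalSorter(deps).static_order())

order = evaluation_order(dependencies)
print(order)  # "pelvis" always comes first; "spine" may land anywhere after it
```

The graph does not care what the nodes compute. All it contributes is the guarantee that nothing is evaluated before the things it depends on.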

IK handles are a perfect example. There’s nothing stopping you from making an IK handle that would evaluate through the Maya node graph as long as it isn't cyclic, but Maya’s developers wanted their handle to have two-way interactions with the joints that it drives. So they broke out of Maya’s basic model of scene evaluation, to the eternal consternation of TDs who thought they could reason about the graph by tracing connections. Maya IK handles have worked this way since Maya came out in 1998. That’s how long it took Alias/Wavefront to give up on a consistent scene graph model and just start special casing things--no time at all. They didn’t release a single version of the product before doing so.

So how does my node graph differ from Maya’s? Well, for one thing it’s vastly simpler and does an extremely specific task, instead of trying to be the basis for an entire application. To be fair, if I were trying to write a node graph that could support that load, I probably would have failed miserably, since I’m not actually a software engineer!

Its very lightweight-ness is both the reason why I could write it, and its purpose--it’s so simple that I can destroy and recreate it in different forms as needed without incurring a significant performance penalty or pulling the rug out from under some other aspect of the scene. I also make no assumption that the graph is the only way to evaluate transforms in the ephemeral rig system. It’s used to correctly order evaluation of transforms when it’s important to do so, and ignored when evaluation order is not important.
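As a rough illustration of that build, evaluate, discard lifecycle, here is a toy version. Everything in it is an assumption made for the example: the `EphemeralGraph` class, the `follow` helper, and the use of plain floats as stand-ins for transforms are hypothetical, not the real system.

```python
from graphlib import TopologicalSorter

class EphemeralGraph:
    """Throwaway graph: built for one interaction, evaluated, then discarded."""

    def __init__(self):
        self._inputs = {}  # node name -> tuple of upstream node names
        self._funcs = {}   # node name -> callable that computes the node

    def add(self, name, func, inputs=()):
        self._inputs[name] = tuple(inputs)
        self._funcs[name] = func

    def evaluate(self, values):
        """Update `values` in dependency order and return it."""
        for name in TopologicalSorter(self._inputs).static_order():
            if name not in self._funcs:
                continue  # external input: its value is already in `values`
            args = [values[i] for i in self._inputs[name]]
            values[name] = self._funcs[name](*args)
        return values

def follow(offset):
    """Toy 1D 'transform': sit at a fixed offset from the parent."""
    return lambda parent: parent + offset

values = {"root": 0.0}

# Build a graph for this one interaction...
graph = EphemeralGraph()
graph.add("mid", follow(2.0), inputs=("root",))
graph.add("tip", follow(1.5), inputs=("mid",))

values["root"] = 10.0   # the user drags the root control
graph.evaluate(values)  # ...evaluate it...
del graph               # ...and throw it away; only the values persist

print(values)
```

The design point is that the graph holds no state anyone else depends on. The poses live in `values`, so discarding the graph costs nothing, and anything that does not need ordering can write to `values` directly without going through a graph at all.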

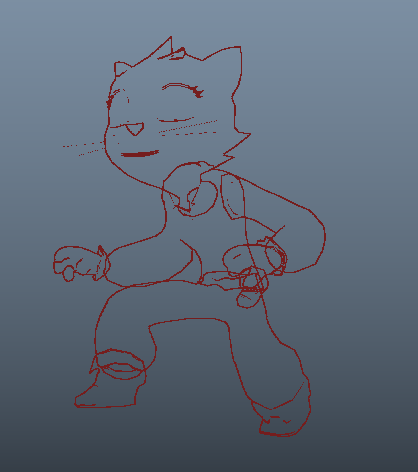

Here’s a couple more examples.

Here I’m doing a reverse foot, ephemeral rig style. Once again, rebuilding the graph lets me switch behaviors in seemingly circular ways easily. Of particular note here is that nothing really needs to change much in order to allow for "backwards kinematics"--I don’t have special controls or attributes. It’s just manipulating the same controls but tracing a different set of connections to build the graph. Any set of controls could be set up to behave that way, just by making appropriate connections that can be followed by the ephemeral DG.
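One way to picture "same controls, different connections" is to store the links between controls without a direction and let the grabbed control decide which way they are followed. The sketch below is only an assumption about how that could work, not the implementation behind the reverse foot shown here; `links` and `build_dependencies` are hypothetical names.

```python
from collections import deque

# Hypothetical undirected links between foot controls.
links = [("ankle", "ball"), ("ball", "toe")]

def build_dependencies(links, grabbed):
    """Walk outward from the grabbed control.

    Returns (order, driver): `order` is the evaluation order, and
    `driver[c]` is the control that c follows for this interaction
    (None for the grabbed control itself).
    """
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)

    driver = {grabbed: None}
    order = []
    queue = deque([grabbed])
    while queue:
        current = queue.popleft()
        order.append(current)
        for other in neighbors.get(current, []):
            if other not in driver:  # not reached yet
                driver[other] = current
                queue.append(other)
    return order, driver

# Grab the ankle: the foot behaves like ordinary forward kinematics.
fk_order, fk_driver = build_dependencies(links, "ankle")
# Grab the toe: same controls, same links, but the ankle now follows the toe.
rev_order, rev_driver = build_dependencies(links, "toe")

print(fk_order, fk_driver)
print(rev_order, rev_driver)
```

Because the direction is chosen at build time, neither result is cyclic even though, taken together, the ankle drives the toe and the toe drives the ankle.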

And here’s a tail, showing just how useful the ephemeral rig behavior is at posing arbitrary numbers of controls.

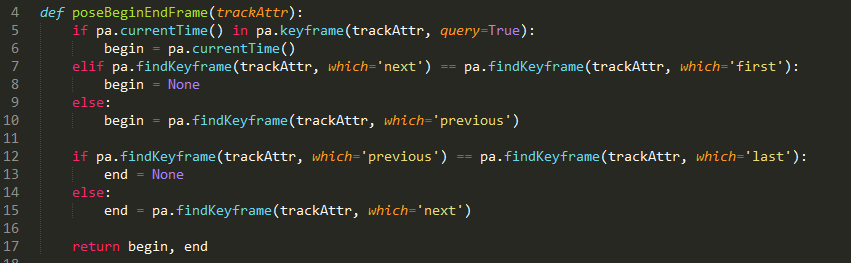

Next time we’ll get into the code, and see how the ephemeral rig DG operates at a low level.